TPC-H on a Raspberry Pi

TL;DR: DuckDB can run all TPC-H queries on a Raspberry Pi 5 board up to the 1,000 GiB dataset.

Update: The setup described here can run all TPC-H queries on the SF100, SF300 and SF1,000 datasets with DuckDB v1.2.2 and newer versions. The timings given in this blog post were achieved in January 2025 using DuckDB v1.2-dev.

Introduction

The Raspberry Pi is an initiative to provide affordable and easy-to-program microcomputer boards. The initial model in 2012 had a single CPU core running at 0.7 GHz, 256 MB RAM and an SD card slot, making it a great educational platform. Over time the Raspberry Pi Foundation introduced more and more powerful models. The latest model, the Raspberry Pi 5, has a 2.4 GHz quad-core CPU and – with extra connectors – can even make use of NVMe SSDs for storage.

Last week, the Pi 5 got another upgrade: it can now be purchased with 16 GB RAM. The DuckDB team likes to experiment with DuckDB in unusual setups such as smartphones dipped into dry ice, so we were eager to get our hands on a new Raspberry Pi 5. After all, 16 GB of memory is equivalent to the amount found in the median gaming machine as reported in the December 2024 Steam survey. So, surely, the Pi must be able to handle a few dozen GBs of data, right? We ventured to find out.

Setup

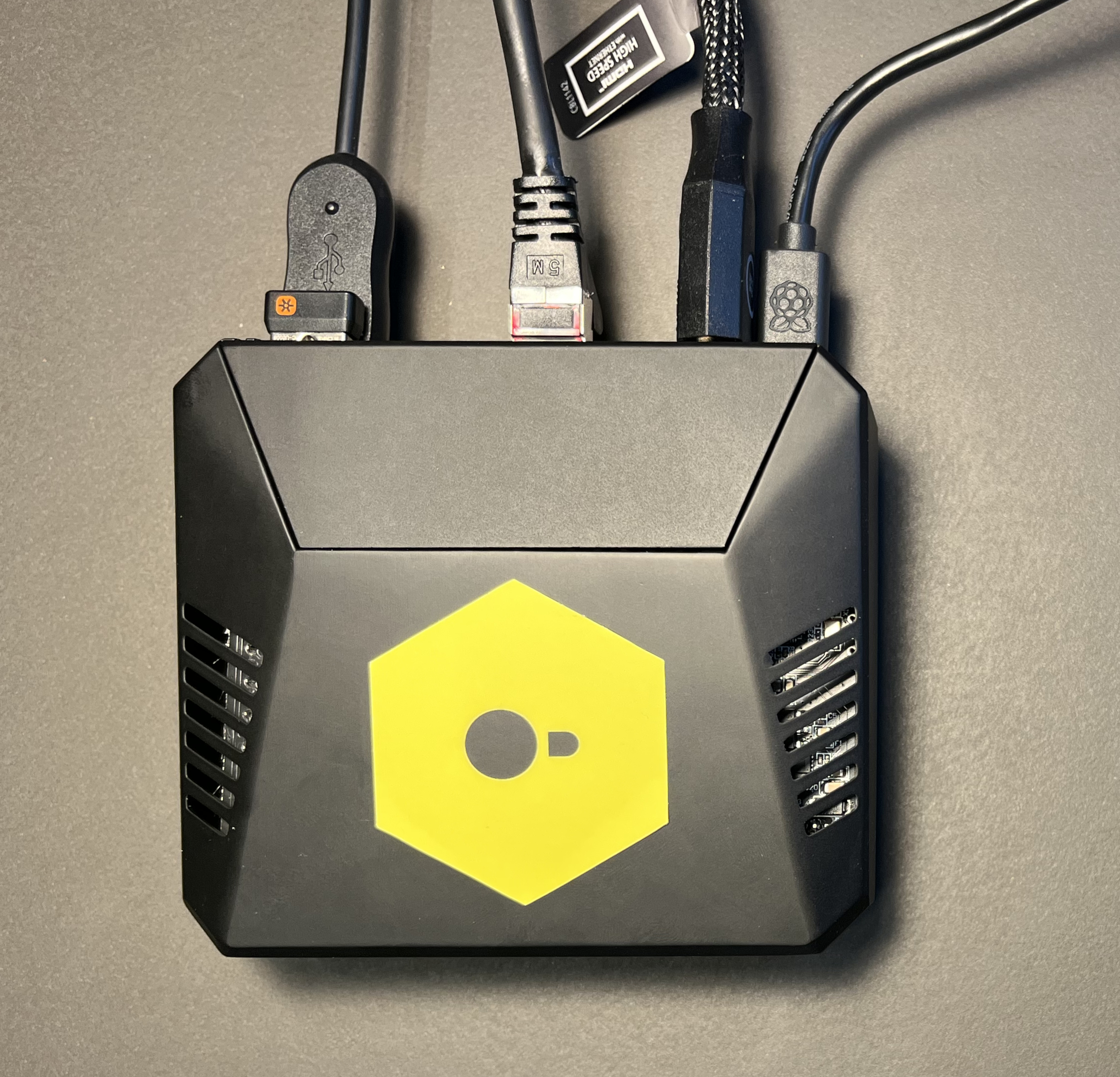

Our setup consisted of the following components, priced at a total of $300:

| Component | Price (USD) |

|---|---|

| Raspberry Pi 5 with 16 GB RAM | 120.00 |

| Raspberry Pi 27 W USB-C power supply | 13.60 |

| Raspberry Pi microSD card (128 GB) | 33.40 |

| Samsung 980 NVMe SSD (1 TB) | 84.00 |

| Argon ONE V3 Case | 49.00 |

| Total | $300.00 |

We installed the heat sinks, popped the SSD into place, and assembled the house. Here is a photo of our machine:

Experiments

So what is this little box capable of? We used the TPC-H workload to find out.

We first updated the Raspberry Pi OS (a fork of Debian Linux) to its latest version, 2024-11-19.

We then compiled DuckDB version 0024e5d4be using the Raspberry Pi build instructions.

To make the queries easy to run, we also included the TPC-H extension in the build:

```shell
GEN=ninja BUILD_EXTENSIONS="tpch" make
```

We then downloaded DuckDB database files containing the TPC-H datasets at different scale factors (SF):

```shell
wget https://blobs.duckdb.org/data/tpch-sf100.db
wget https://blobs.duckdb.org/data/tpch-sf300.db
```

We used two different storage options for both scale factors. For the first run, we stored the database files on the microSD card, which is the storage that most Raspberry Pi setups have. This card works fine for serving the OS and programs, and it can also store DuckDB databases, but it is rather slow, especially for write-intensive operations such as spilling to disk. Therefore, for the second run, we placed the database files on the 1 TB NVMe SSD.

For the measurements, we used our tpch-queries.sql script, which performs a cold run of all TPC-H queries, from Q1 to Q22.

tpch-queries.sql

```sql
PRAGMA version;
SET enable_progress_bar = false;
LOAD tpch;
.timer on
PRAGMA tpch(1);
PRAGMA tpch(2);
PRAGMA tpch(3);
PRAGMA tpch(4);
PRAGMA tpch(5);
PRAGMA tpch(6);
PRAGMA tpch(7);
PRAGMA tpch(8);
PRAGMA tpch(9);
PRAGMA tpch(10);
PRAGMA tpch(11);
PRAGMA tpch(12);
PRAGMA tpch(13);
PRAGMA tpch(14);
PRAGMA tpch(15);
PRAGMA tpch(16);
PRAGMA tpch(17);
PRAGMA tpch(18);
PRAGMA tpch(19);
PRAGMA tpch(20);
PRAGMA tpch(21);
PRAGMA tpch(22);
```
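Since the script is just a fixed preamble followed by 22 nearly identical lines, it can also be generated instead of typed out. The following is a minimal sketch in shell that writes the same statements to `tpch-queries.sql` in the current directory; it is a convenience we are adding here, not necessarily how the original file was produced.

```shell
# Output file name as used in this post.
out="tpch-queries.sql"

{
  # Fixed preamble: print the version, silence the progress bar,
  # load the TPC-H extension and enable the CLI timer.
  echo "PRAGMA version;"
  echo "SET enable_progress_bar = false;"
  echo "LOAD tpch;"
  echo ".timer on"

  # One PRAGMA per TPC-H query, Q1 to Q22.
  for q in $(seq 1 22); do
    echo "PRAGMA tpch(${q});"
  done
} > "$out"
```

The resulting file has 26 lines: the four preamble lines plus one line per query.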

We ran the script as follows:

```shell
duckdb tpch-sf${SF}.db -f tpch-queries.sql
```
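To repeat this for several scale factors and storage locations, the command can be wrapped in a small function. The sketch below is only an illustration: the function name `run_tpch`, the log file naming and the example directory are our assumptions and do not come from the original setup.

```shell
# Hypothetical helper: run the query script against one database file and
# keep the timer output in a per-run log file.
run_tpch() {
  local sf="$1"       # scale factor, e.g., 100 or 300
  local db_dir="$2"   # directory holding tpch-sf<SF>.db (assumed layout)
  local label="$3"    # free-form tag for the log name, e.g., "nvme"

  duckdb "${db_dir}/tpch-sf${sf}.db" -f tpch-queries.sql \
    > "tpch-sf${sf}-${label}.log" 2>&1
}

# Example invocation (the path is a placeholder, not the one we used):
# for sf in 100 300; do run_tpch "$sf" /mnt/nvme nvme; done
```

Keeping one log per run makes it easier to compare the microSD and NVMe timings afterwards.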

We did not encounter any crashes, errors or incorrect results. The following table contains the aggregated runtimes:

| Scale factor | Storage | Geometric mean runtime | Total runtime |
|---|---|---|---|
| SF100 | microSD card | 23.8 s | 769.9 s |
| SF100 | NVMe SSD | 11.7 s | 372.3 s |
| SF300 | microSD card | 171.9 s | 4,866.5 s |
| SF300 | NVMe SSD | 55.2 s | 1,561.8 s |

Aggregated Runtimes

For the SF100 dataset, the geometric mean runtimes were 23.8 seconds with the microSD card and 11.7 seconds with the NVMe disk. The latter isn't that far off from the 7.5 seconds we achieved with the Samsung S24 Ultra, which has 8 CPU cores, most of which run at a higher frequency than the Raspberry Pi's cores.

For the SF300 dataset, DuckDB had to spill more to disk due to the limited system memory. This resulted in relatively slow queries for the microSD card setup, with a geometric mean of 171.9 seconds. However, switching to the NVMe disk gave a roughly 3× improvement, bringing the geometric mean down to 55.2 seconds.
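The speedups quoted above follow directly from the geometric means. As a quick check, the small helper below (the name `speedup` is ours, not part of DuckDB) divides the microSD figure by the NVMe figure for each scale factor:

```shell
# Hypothetical helper: ratio of two runtimes, rounded to one decimal place.
speedup() {
  awk -v slow="$1" -v fast="$2" 'BEGIN { printf "%.1f\n", slow / fast }'
}

speedup 23.8 11.7    # SF100: microSD vs. NVMe geometric mean
speedup 171.9 55.2   # SF300: microSD vs. NVMe geometric mean
```

With the reported values, this prints 2.0 and 3.1: the NVMe SSD roughly halves the geometric mean at SF100 and cuts it to about a third at SF300, where spilling to disk matters more.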

Individual Query Runtimes

If you are interested in the individual query runtimes, you can find them below.

Query runtimes (in seconds)

| Query | SF100 / microSD | SF100 / NVMe | SF300 / microSD | SF300 / NVMe |
|---|---|---|---|---|
| Q1 | 81.1 | 15.6 | 242.0 | 55.1 |
| Q2 | 7.9 | 2.4 | 27.8 | 7.9 |
| Q3 | 31.5 | 11.8 | 218.9 | 52.7 |
| Q4 | 40.2 | 11.4 | 157.5 | 40.9 |
| Q5 | 32.2 | 12.3 | 215.9 | 54.1 |
| Q6 | 1.6 | 1.4 | 155.9 | 32.7 |
| Q7 | 12.1 | 12.3 | 255.2 | 69.6 |
| Q8 | 25.0 | 19.2 | 298.0 | 77.8 |
| Q9 | 74.0 | 50.1 | 337.2 | 147.7 |
| Q10 | 54.7 | 24.3 | 234.9 | 82.6 |
| Q11 | 7.8 | 2.3 | 34.0 | 14.9 |
| Q12 | 43.1 | 13.6 | 202.9 | 50.5 |
| Q13 | 59.2 | 51.7 | 207.4 | 177.2 |
| Q14 | 33.0 | 9.7 | 269.7 | 59.0 |
| Q15 | 11.1 | 7.1 | 157.2 | 39.6 |
| Q16 | 8.7 | 8.7 | 33.4 | 27.1 |
| Q17 | 8.3 | 7.6 | 249.4 | 66.2 |
| Q18 | 73.9 | 40.9 | 374.7 | 177.1 |
| Q19 | 66.0 | 17.8 | 317.9 | 73.8 |
| Q20 | 22.4 | 8.4 | 273.1 | 56.0 |
| Q21 | 66.9 | 35.2 | 569.5 | 172.5 |
| Q22 | 9.2 | 8.4 | 34.1 | 26.7 |
| Geomean | 23.8 | 11.7 | 171.9 | 55.2 |
| Total | 769.9 | 372.3 | 4,866.5 | 1,561.8 |
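The Geomean and Total rows can be recomputed from the per-query values. The sketch below does this for the SF100 / NVMe column with `awk`, computing the geometric mean as the exponential of the mean of the logarithms. The helper name `summarize_runtimes` is hypothetical and only used for illustration.

```shell
# Hypothetical helper: read one runtime per line from stdin and print the
# geometric mean, the total, and the number of values.
summarize_runtimes() {
  awk '
    NF  { total += $1; log_sum += log($1); n++ }
    END { if (n > 0) printf "geomean=%.1f total=%.1f n=%d\n", exp(log_sum / n), total, n }
  '
}

# SF100 / NVMe column from the table above, Q1 to Q22.
printf '%s\n' 15.6 2.4 11.8 11.4 12.3 1.4 12.3 19.2 50.1 24.3 2.3 \
              13.6 51.7 9.7 7.1 8.7 7.6 40.9 17.8 8.4 35.2 8.4 |
  summarize_runtimes
```

For this column, the geometric mean comes out at 11.7 seconds, matching the table. The total of the rounded per-query values is 372.2 seconds, a hair below the reported 372.3 seconds, which was presumably computed from the unrounded timings.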

Perspective

To put our results into context, we looked at historical TPC-H results and found that several enterprise solutions from 20 years ago had similar query performance, often reporting more than 60 seconds as the geometric mean of their query runtimes. Back then, these systems, with their software license and maintenance costs factored in, were priced around $300,000! This means that, if you ignore the maintenance aspects, the “bang for your buck” metric (a.k.a. price–performance ratio) for TPC-H read queries has increased by around 1,000× over the last 20 years. This is a great demonstration of what continuous innovation in hardware and software enables in modern systems.
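The 1,000× figure is a back-of-the-envelope estimate. Assuming the query performance is roughly equal, as suggested by the similar geometric means, the gain in price–performance reduces to the ratio of the two prices:

```shell
# Prices taken from the text. Treating query performance as equal is an
# assumption based on the "similar query performance" observation.
legacy_price_usd=300000
pi_price_usd=300

# With comparable runtimes, the price-performance gain is the price ratio.
echo "$(( legacy_price_usd / pi_price_usd ))x"
```

This prints `1000x`.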

Disclaimer: The results presented here are not official TPC-H results and only include the read queries of TPC-H.

Summary

We showed that you can use DuckDB in a Raspberry Pi setup that costs $300 and runs all queries on the TPC-H SF300 dataset in less than 30 minutes.

We hope you enjoyed this blog post. If you have an interesting DuckDB setup, don't forget to share it with us!
